When your AI visual inspection works perfectly in pilots but starts failing once it hits your shopfloor

If you have already tried AI visual inspection, the pilot phase probably felt reassuring.

The demo worked. The system detected defects. The accuracy numbers looked strong. It felt like you had finally found something that could reduce manual checks and bring consistency to quality inspection.

Then the system moved from the pilot setup to your actual shopfloor.

That is usually when confidence starts to fade. A defect gets missed. Alerts appear when nothing seems wrong. Operators stop paying attention to the screen. Slowly, you begin to question whether the issue is the AI itself, or something deeper.

If this feels familiar, you are not alone. This gap between pilot success and real production performance is one of the most common challenges with AI visual inspection in manufacturing.

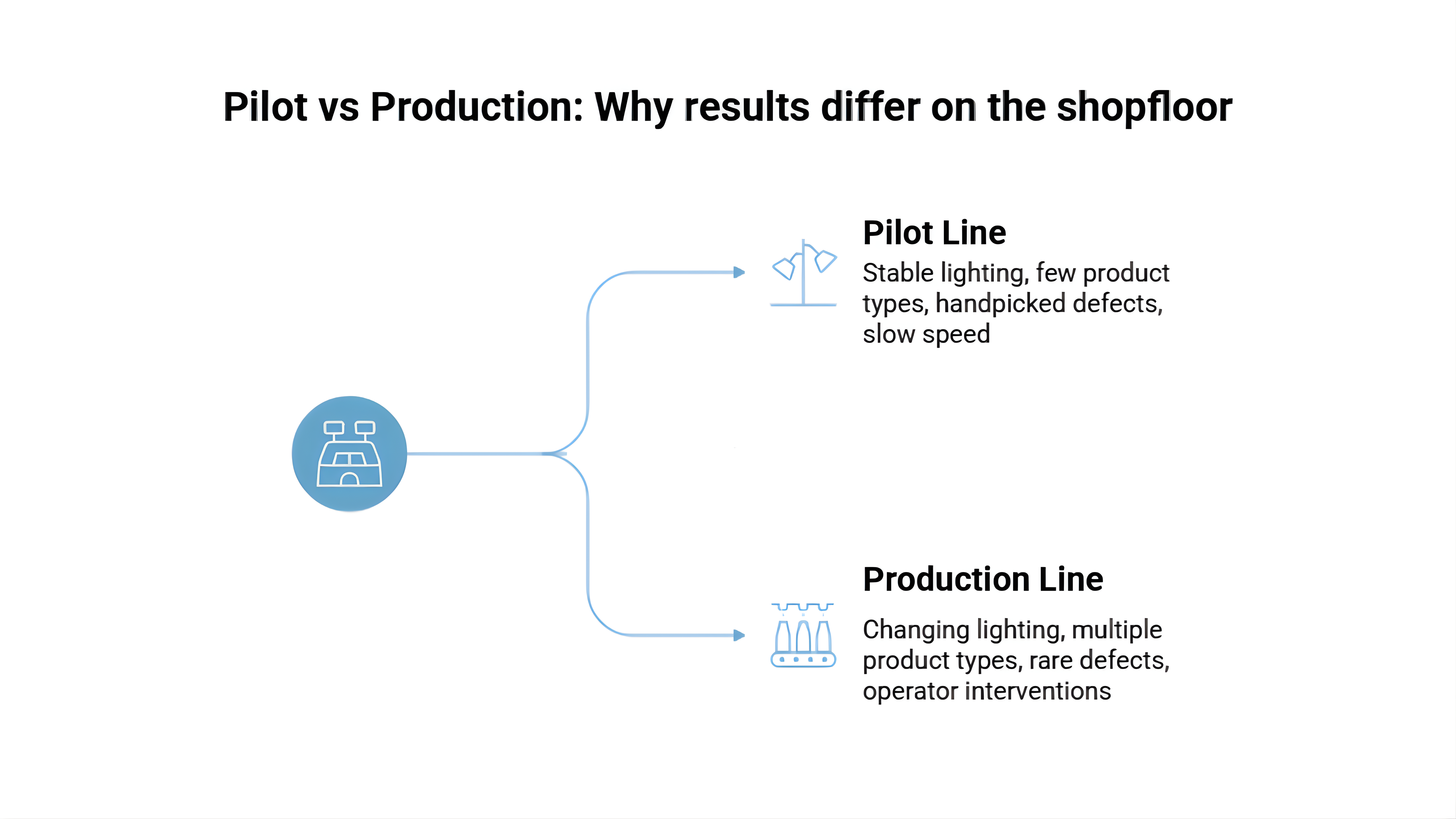

Why pilots feel more reliable than production

Pilots are designed to prove that something can work, not that it will work every single day.

During a pilot, conditions are controlled. Lighting stays stable. Only a limited set of products is tested. Defect samples are carefully selected. The line often runs slower, and everyone involved is closely monitoring the system.

There is nothing wrong with this. Pilots serve an important purpose. But they show the system at its best, not under everyday pressure.

Your shopfloor is very different. It changes constantly, often in small ways that are hard to predict.

What really changes once the system goes live

Once AI visual inspection becomes part of daily production, small variations start to matter.

Lighting changes across shifts. Raw materials look slightly different from batch to batch. Machines wear down, subtly changing how parts appear. New product variants are often introduced faster than the system can be retrained for them.

At the same time, real defects are rare and often subtle. Missing even a few can lead to rework, quality issues, or customer complaints. This is where many systems struggle, not because the technology is weak, but because real production is messy.

At this point, AI is no longer running in isolation. It is working alongside operators, production targets, and real business pressure.

Why accuracy numbers stop being useful

This is where frustration usually sets in.

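The pilot showed high accuracy, yet production feels unreliable. The problem is that accuracy alone does not reflect what really matters on the shopfloor. Because real defects are rare, a system can be right about almost every part and still miss many of the defects you actually care about.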

What matters to you is not how often the system is right in general, but what happens when it is wrong. Missing a real defect carries risk. Raising too many false alerts slows the line and causes operators to lose trust.
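A small worked example shows why. The numbers below are hypothetical and do not come from any real line: 10,000 parts, of which 50 are truly defective. The sketch compares two imagined systems.

- The first simply passes everything. It scores 99.5% accuracy while catching none of the defects.
- The second catches 40 of the 50 defects but raises 300 false alerts. Its accuracy is lower, at 96.9%, yet it is far more useful.

The helper names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class InspectionResult:
    """Counts from one hypothetical production run (illustrative, not real data)."""
    caught_defects: int  # real defects the system flagged (true positives)
    missed_defects: int  # real defects the system passed (false negatives)
    false_alerts: int    # good parts the system flagged (false positives)
    good_passed: int     # good parts the system passed (true negatives)

    @property
    def total(self) -> int:
        return self.caught_defects + self.missed_defects + self.false_alerts + self.good_passed

    @property
    def accuracy(self) -> float:
        # Share of all parts judged correctly: the headline number from most pilots
        return (self.caught_defects + self.good_passed) / self.total

    @property
    def defect_catch_rate(self) -> float:
        # Share of real defects actually caught (recall); misses are the quality risk
        real_defects = self.caught_defects + self.missed_defects
        return self.caught_defects / real_defects if real_defects else 0.0

    @property
    def false_alert_rate(self) -> float:
        # Share of good parts wrongly flagged; drives line stops and operator fatigue
        good_parts = self.false_alerts + self.good_passed
        return self.false_alerts / good_parts if good_parts else 0.0


# Hypothetical run: 10,000 parts, 50 of them truly defective.
passes_everything = InspectionResult(caught_defects=0, missed_defects=50, false_alerts=0, good_passed=9950)
useful_system = InspectionResult(caught_defects=40, missed_defects=10, false_alerts=300, good_passed=9650)

for name, r in [("passes everything", passes_everything), ("useful system", useful_system)]:
    print(f"{name}: accuracy={r.accuracy:.1%}, "
          f"defects caught={r.defect_catch_rate:.0%}, "
          f"false alerts={r.false_alert_rate:.1%}")
```

Looking at misses and false alerts separately, rather than at one accuracy figure, is what connects the numbers back to quality risk and line disruption.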

In real production, you do not care about AI scores. You care about quality, downtime, and whether the system supports your team or gets in their way.

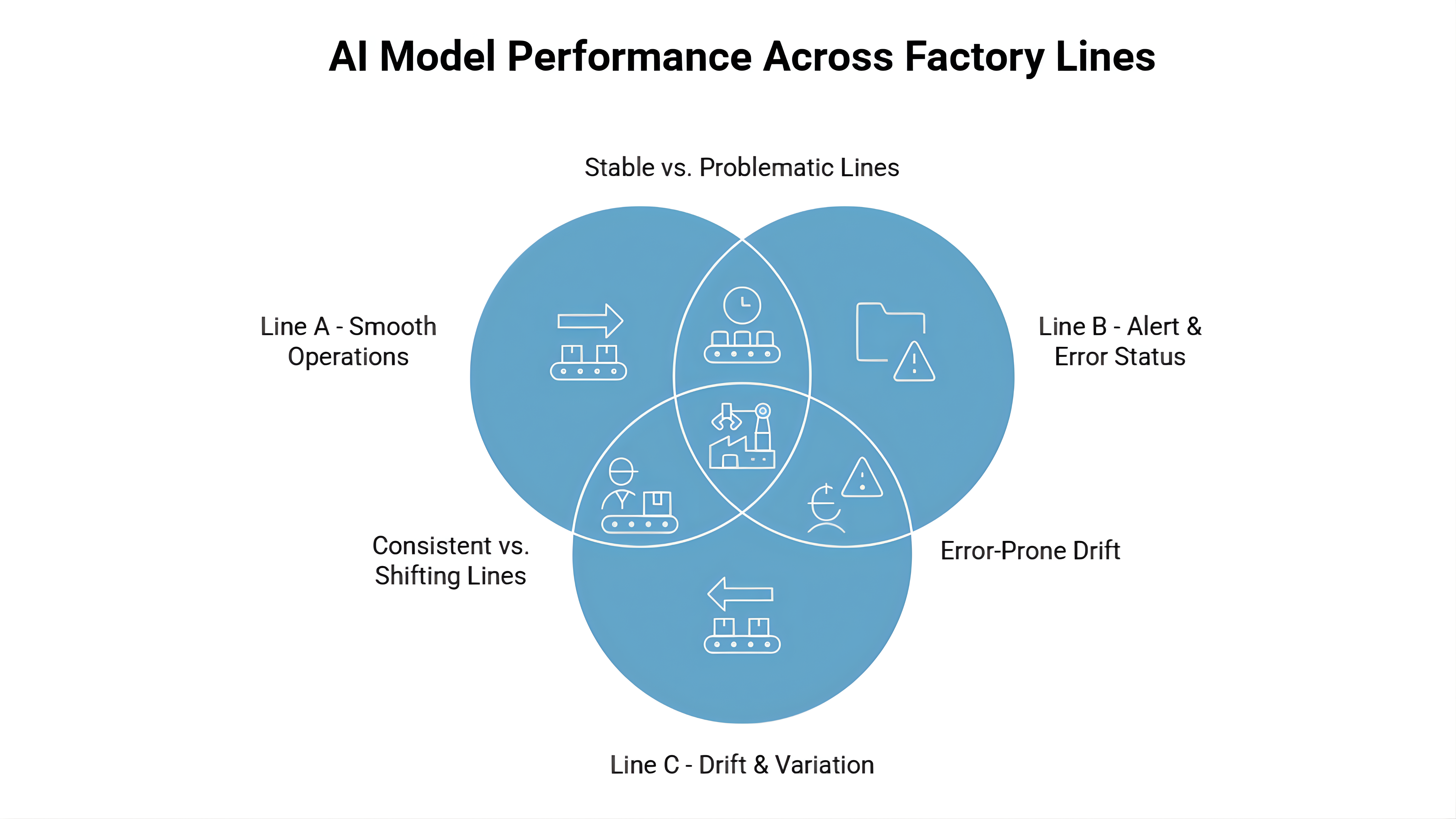

Why scaling across lines and plants is harder than expected

Even if AI works well on one line, that success does not automatically repeat elsewhere.

Different lines behave differently. Different plants have different lighting, layouts, and operating habits. A setup that worked a few months ago can slowly drift as materials or processes change.

This is where many teams get stuck. Instead of scaling smoothly, they spend their time constantly fixing and retraining models. Over time, the system becomes dependent on a small group of experts, and progress slows.

Scaling is not about repeating what worked once. It is about handling variation without starting over.

What production-ready Vision AI needs to look like

This is where the difference between experiments and real systems becomes clear.

Production-ready Vision AI is built to handle variation, not avoid it. It allows operators to give feedback easily. It shows confidence and trends instead of only pass or fail decisions. Most importantly, it helps people understand what the system is seeing.
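To illustrate what "confidence and trends instead of only pass or fail" can mean in practice, here is a minimal sketch under several assumptions. It is not Seewise's implementation or API.

- It assumes the model outputs a defect score between 0 and 1.
- It uses two hypothetical thresholds to route uncertain parts to an operator for review, instead of forcing a pass or fail.
- It uses a simple rolling average compared against a commissioning baseline as a basic drift check.

All names, thresholds and window sizes are placeholders that would need tuning per line.

```python
from collections import deque
from statistics import mean

# Hypothetical thresholds on a defect score in [0, 1] (higher = more likely defective).
PASS_BELOW = 0.3
FAIL_ABOVE = 0.8


def decide(score: float) -> str:
    """Three-way decision: uncertain parts go to an operator instead of a forced pass/fail."""
    if score < PASS_BELOW:
        return "pass"
    if score > FAIL_ABOVE:
        return "fail"
    return "review"


class ScoreTrend:
    """Simple drift check: rolling mean of recent scores vs. a baseline from commissioning."""

    def __init__(self, baseline: float, window: int = 200, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # keeps only the last `window` scores

    def add(self, score: float) -> None:
        self.recent.append(score)

    def drifting(self) -> bool:
        # Only judge once the window is full, to avoid alarms on a handful of parts
        if len(self.recent) < self.recent.maxlen:
            return False
        return abs(mean(self.recent) - self.baseline) > self.tolerance


# Usage: scores creeping upward over a short (hypothetical) window
trend = ScoreTrend(baseline=0.1, window=5)
for s in [0.12, 0.25, 0.35, 0.4, 0.45]:
    trend.add(s)
    print(s, decide(s))
print("drift suspected:", trend.drifting())
```

The review band gives operators a natural place to give feedback. The trend check can surface gradual changes, such as lighting or material shifts, before they show up as missed defects.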

Modern Vision AI platforms, such as Seewise, are designed around real shopfloor conditions rather than perfect demos. The focus is on staying reliable as things change, not just performing well once.

Moving from pilots to lasting impact

Pilots help you get started. They show what is possible.

But real value is created after deployment, when the system continues to work during busy shifts, difficult conditions, and unexpected changes.

If you are evaluating AI visual inspection today, the most important question is not whether it looks good in a demo. It is whether it will still work for you months later, on a real line, under real pressure.

And if what you are seeing in production does not match what you were promised during the pilot, it may help to talk through your setup with someone who has seen this pattern before. You can reach out if that conversation would be useful.

The difference between a successful pilot and a reliable production system is not better AI.

It is better design for reality.